Decentralised technologies interest me greatly. A sub-section of this genre is decentralised storage, which got its first mass debut with Napster. Napster was a “hybrid” model in that it used servers to coordinate peers… but not long after Napster other peer-to-peer services came along, like Kazaa, Kademlia (the basis for a myriad of p2p services), BitTorrent and very recently the boldly named InterPlanetary File System (IPFS). None of these protocols support monetisation. They are embodiments of the sharing economy.

More recently, companies have tried to produce decentralised storage systems which do monetise data storage. The big recent names have been Sia, Ubbey and Storj, with perhaps Urbit hanging around in the background. All of these fledgling systems have had major issues associated with them, which is an interesting discussion in itself. I’ve experimented with at least Sia and Ubbey previously.

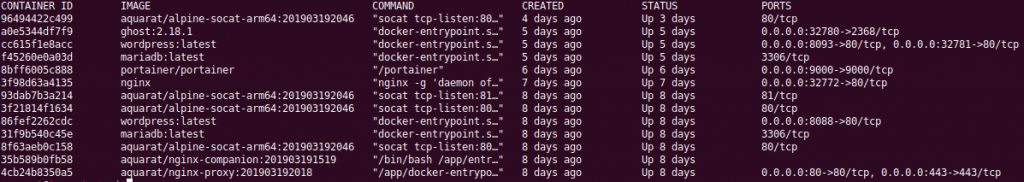

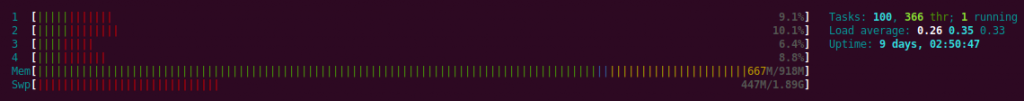

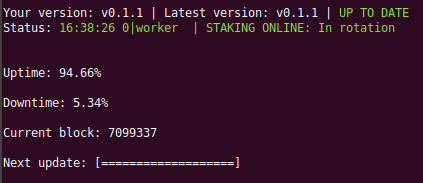

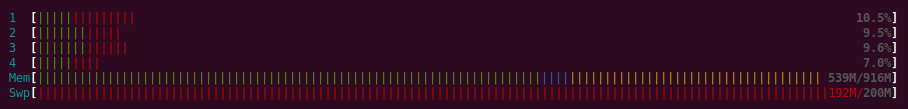

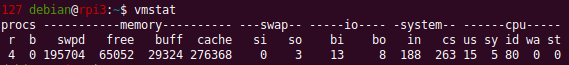

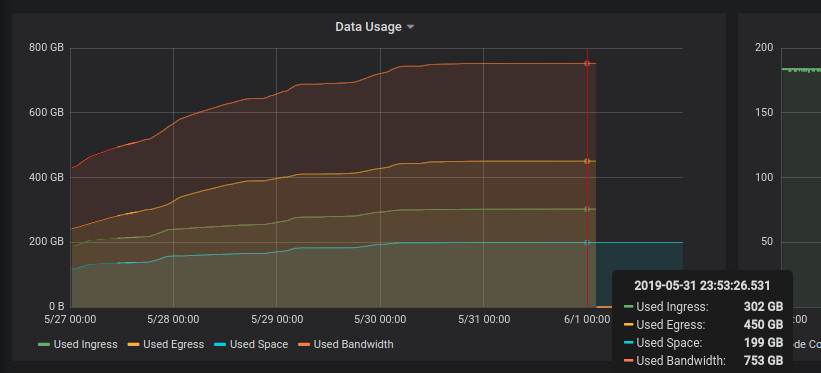

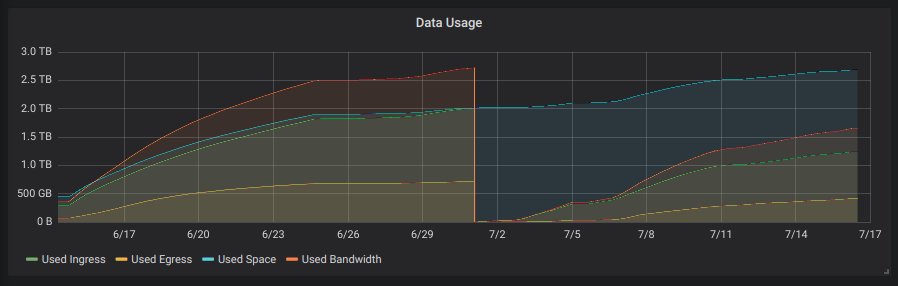

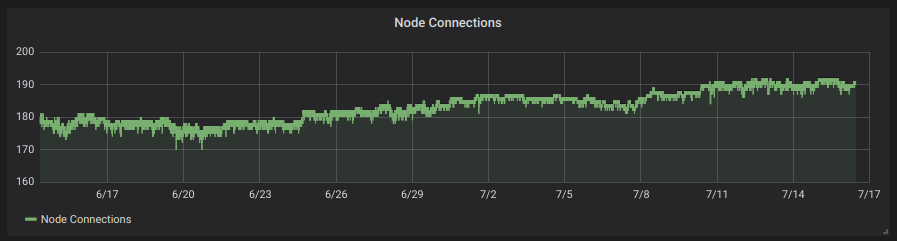

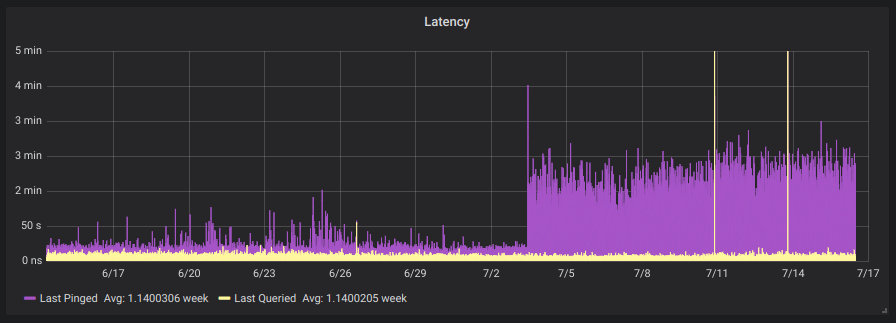

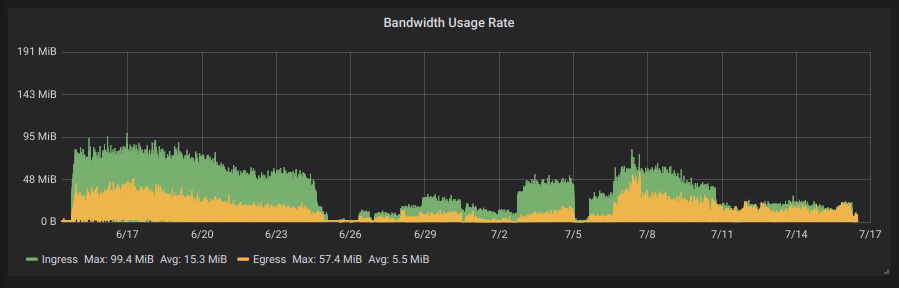

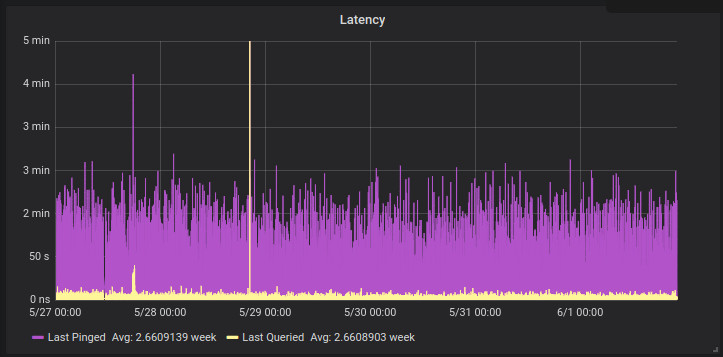

Storj recently announced that they were going to completely overhaul their network based on lessons learnt from their current system (v2) and asked for testers for their new system. I signed up and so far their software has been stable. I’ve written a small app which grabs telemetry from their daemon and inserts it into a Postgres database. Grafana is then used to graph this data.

To get a small logging system going you’ll need to do this :

Launch a Postgres instance on your Docker-enabled machine :

docker run --name storj-pg --restart=always -v /home/yourhome/pgdata:/var/lib/postgresql/data -d -e POSTGRES_USER="storj" -e POSTGRES_PASSWORD="storj" postgres

Launch Grafana (if you don’t already have it) :

docker run --restart=always -d -p 3000:3000 --name=grafana -v /home/yourhome/grafana-data:/var/lib/grafana --link storj-pg:storj-pg grafana/grafana

# You can also use a network to link these machines, which is the new way of doing things and is a fair bit sexier than the "link" directive.

Then launch my app (to generate the initial config) :

docker run -ti --rm --detach=false -v /home/yourhome/telemetryconfig:/config aquarat/storj-postgres-logger:0.3a app -configfile=/config/config.json

My app will create a config file in the host directory, which you will need to modify to suit your installation. Once that’s done, relaunch it to check it works :

docker run --detach=false --rm --link storj-pg:storj-pg --link storagenode:storagenode -v /home/yourhome/telemetryconfig:/config aquarat/storj-postgres-logger:0.3a app -configfile=/config/config.json

Once you know it works you can relaunch it in the background :

docker run -d --name="pg-logger" --restart=always --link storj-pg:storj-pg --link storagenode:storagenode -v /home/yourhome/telemetryconfig:/config aquarat/storj-postgres-logger:0.3a app -configfile=/config/config.json

You can monitor the logger with :

docker logs -f pg-logger

The payload inserted into the table will look like this :

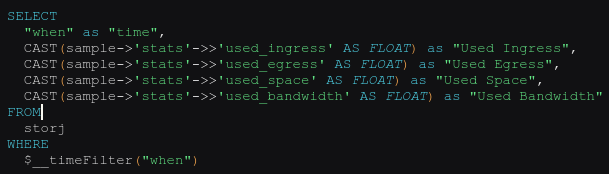

{"node_id":"xxxTz8ZhctRT7SmdWmwVMYYGhab1BkL2HXEH9LfCjvgBHTyEKvF","node_connections":183,"bootstrap_address":"bootstrap.storj.io:8888","external_address":"hansie.com:28967","stats":{"used_space":136240764928,"available_space":7863759235072,"used_ingress":213188739072,"used_egress":284742114304,"used_bandwidth":497930853376,"available_bandwidth":19502069146624},"uptime":{"seconds":1616778,"nanos":894016912},"last_pinged":{"seconds":1558951645,"nanos":705568818},"last_queried":{"seconds":1558951645,"nanos":938984110}}

The associated Grafana query to pull these values out of the database looks like this :

Of course Docker isn’t required for any of this, and “links” are only needed when containers aren’t on the same Docker network.
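If you want to sanity-check the telemetry payload outside of Grafana, a few lines of Python will do. This is only a rough sketch and not how the logger itself works: the `summarise` function and the keys it returns are names I've made up for illustration, and the sample is a trimmed copy of the payload shown earlier with the node ID shortened. It assumes the space and bandwidth figures are in bytes and that `seconds` under `last_pinged` is a Unix timestamp, which is what the numbers suggest.

```python
import json
from datetime import datetime, timezone

# Trimmed copy of the payload shown earlier (node_id shortened for illustration).
PAYLOAD = """
{"node_id": "xxx", "node_connections": 183,
 "stats": {"used_space": 136240764928, "available_space": 7863759235072,
           "used_ingress": 213188739072, "used_egress": 284742114304,
           "used_bandwidth": 497930853376, "available_bandwidth": 19502069146624},
 "uptime": {"seconds": 1616778, "nanos": 894016912},
 "last_pinged": {"seconds": 1558951645, "nanos": 705568818}}
"""


def summarise(raw: str) -> dict:
    """Turn one raw telemetry payload into human-friendly numbers.

    Hypothetical helper: assumes byte counts and Unix-epoch seconds.
    """
    doc = json.loads(raw)
    stats = doc["stats"]

    # Used + available gives the total allocation for each resource.
    total_space = stats["used_space"] + stats["available_space"]
    total_bandwidth = stats["used_bandwidth"] + stats["available_bandwidth"]

    return {
        "connections": doc["node_connections"],
        "total_space_tb": total_space / 1e12,
        "space_used_pct": 100 * stats["used_space"] / total_space,
        "bandwidth_used_pct": 100 * stats["used_bandwidth"] / total_bandwidth,
        "uptime_days": doc["uptime"]["seconds"] / 86400,
        "last_pinged": datetime.fromtimestamp(
            doc["last_pinged"]["seconds"], tz=timezone.utc
        ),
    }


if __name__ == "__main__":
    for key, value in summarise(PAYLOAD).items():
        print(f"{key}: {value}")
```

For the sample above this works out to an 8 TB allocation with roughly 1.7% of the space used, which is a handy cross-check against whatever your Grafana panels end up showing.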